Kubernetes Operators - example

Walkthrough of creating a basic Kubernetes Operator with the Operator framework from start to finish, covering certain areas about general functionality in detail.

If you haven’t read the article about Kubernetes Operators, be sure to check it out for a better understanding of what Operators are and how they work.

Requirements

- Go - Main programming language of Kubernetes

- operator-sdk - Operator framework

- Docker

- Kubernetes through kind - for easier testing by running in ephemeral k8s cluster

As always, we need to start with a problem and how to solve it before doing any planning. For our case, let it be simple:

“I want to have an operator to manage my Nginx deployment and monitor its health automatically for me.”

The outcome requirements from this are very simple. We want the Operator to:

- Deploy an application

- Monitor its health

- Restart it if it fails

Generally, the flow for designing an operator, which we will follow next, consists of the following steps:

- Describe a problem

- Designing an API and CRD (CustomResourceDefinition)

- Working with other resources

- Designing a target reconciliation loop

- Handling upgrades and downgrades

- Using failure reporting

When developing an operator, it’s generally good to keep it as simple as possible and iterate on it over time, letting it evolve naturally. You don’t need to fulfill all 5 levels of the capability model; it’s completely fine to stay at level 1, otherwise there would be no level 1 in the capability model.

Designing the API and CRD 📄

The Operator’s API is how it will be represented in Kubernetes, and it translates directly into the blueprint for a Kubernetes CRD. To put it simply, it’s the definition of the configuration of the operator.

We are creating a CRD (Custom Resource Definition), so we first need to register it with the cluster before creating resources from it.

Example CRD

apiVersion: apiextensions.k8s.io/v1

kind: CustomResourceDefinition

metadata:

name: myoperators.operator.example.com

spec:

group: operator.example.com

names:

kind: MyOperator

listKind: MyOperatorList

plural: myoperators

singular: myoperator

scope: Namespaced

versions:

- name: v1alpha1

schema:

openAPIV3Schema:

id: ""

# ...more fields, but omitting due to being quite large

served: true

storage: true

subresources:

status: {}

status:

acceptedNames:

kind: ""

plural: ""

conditions: []

storedVersions: []

The most important fields from above are:

- apiVersion and kind - apiVersion defines the API version of this resource, and kind tells Kubernetes that this is a CRD

- names - Section that defines the names by which we can reference these custom resources, for example when listing them in Kubernetes: just as we use kubectl get pods, we would use kubectl get myoperators

- scope - Defines whether the custom resource exists at the Cluster or Namespaced level

- versions - Defines the schema versions of our operator's API, which lets it evolve while staying backwards compatible

- subresources and status - Related, as they define a status field for the Operator to report its current state
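To make the group, versions, names and scope fields more concrete, here is a minimal sketch of how they combine into the REST path under which the API server serves a namespaced custom resource. The helper name resourcePath, the default namespace and the my-instance object name are assumptions made for illustration only.

```go
package main

import "fmt"

// resourcePath is a hypothetical helper. It builds the path of a namespaced
// custom resource: /apis/<group>/<version>/namespaces/<namespace>/<plural>/<name>.
func resourcePath(group, version, namespace, plural, name string) string {
	return fmt.Sprintf("/apis/%s/%s/namespaces/%s/%s/%s",
		group, version, namespace, plural, name)
}

func main() {
	// Values taken from the example CRD above; namespace and name are made up.
	fmt.Println(resourcePath(
		"operator.example.com", "v1alpha1", "default", "myoperators", "my-instance"))
}
```

This is also why the plural name is the one used on the command line: kubectl resolves myoperators to this API path.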

Our Operator should be fairly simple and as we are working with Nginx, let’s just define the following configurables:

- port - Port on which to expose the Nginx in our cluster

- replicas - Number of replicas to scale to (a bit redundant, but let’s go with it anyway)

- forceDeploy - We use this field when we want to trigger a manual re-deployment without changing any configuration, so it can hold any value

Based on this, we now know that our resource will look like this:

apiVersion: operator.example.com/v1alpha1
kind: NginxOperator
metadata:
  name: my-instance
spec:
  port: 8080
  replicas: 1
status:
  # ... TBD

We can then create the resource by using that file

kubectl create -f my-instance.yaml

and when created, we can retrieve the object with the following command

kubectl get nginxoperators my-instance

Initialize the project boilerplate 📚

mkdir nginx-operator

# inside the directory initialize

operator-sdk init --domain example.com --repo github.com/example/nginx-operator

This will generate the following directories and files:

- config - Directory that holds YAML definitions of Operator resources

- hack - Directory that is used by many projects to hold various hack scripts like to generate or verify changes

- .dockerignore / .gitignore - Declarative lists of files to be ignored by Docker builds and Git, respectively

- Dockerfile - Container image build definitions

- Makefile - Operator build definitions

- PROJECT - File used by Kubebuilder to hold project config information

- go.mod / go.sum - Dependency management lists for go mod

- cmd/main.go - The entry point file for the Operator’s main functional code

The generated main.go also sets up the readyz and healthz endpoints.

To generate the API boilerplate and the controller, run the following:

operator-sdk create api --group operator --version v1alpha1 --kind NginxOperator --resource --controller

This generated the following directories and files using the configuration we provided:

- api/ - Directory with API types / spec

- internal/controller/ - Directory with boilerplate controller code

- cmd/main.go - Updated main.go to use the generated controller

For now, we are only interested in the api/v1alpha1/nginxoperator_types.go file, where we set the API specification.

The other files are not very relevant. The zz_generated* files are generated and we shouldn’t edit them by hand, and groupversion_info.go defines package variables for this API that instruct clients how to handle its objects.

In the nginxoperator_types.go file, the most important structs for us are:

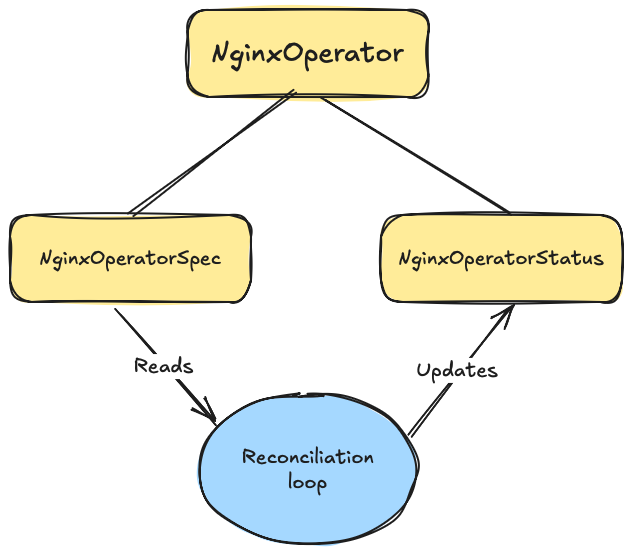

- NginxOperator - Schema of the Nginx operator API (This is our yaml CRD schema as a struct)

- NginxOperatorSpec - Defines the desired state of the operator

- NginxOperatorStatus - Defines the observed state of the operator

How Operator interacts with these structs can be seen in the following picture:

The Operator loop always checks the Spec to learn the desired state, then checks whether the resources it watches match that state, and fixes them if they don’t. The Status is used to report the state of the controller / Operator; we will talk more about it in the failure reporting - status updates part.

It’s also good to be familiar with the Kubernetes API conventions.

To define our schema and add port, replicas and forceDeploy, we need to update the NginxOperatorSpec struct.

// NginxOperatorSpec defines the desired state of NginxOperator

type NginxOperatorSpec struct {

// Port on which the Nginx Pod will be exposed

Port *int32 `json:"port,omitempty"`

// Replicas is the number of deployment replicas we wish to scale

Replicas *int32 `json:"replicas,omitempty"`

// ForceDeploy deploy for manual re-deployment of our Operator, can be any value

ForceDeploy *string `json:"forceDeploy,omitempty"`

}

Comments are useful because they are later copied into the generated schema as field descriptions, which helps users. The json annotations in the code define how these fields are named in json/yaml files.

Alternatively, we can use markers like the following to tell the code generator to add validation and default values to the fields:

type NginxOperatorSpec struct {

// Port on which the Nginx Pod will be exposed

// +kubebuilder:default=8080

// +kubebuilder:validation:Required

Port int `json:"port"`

}

Now our port will have a default value of 8080 and cannot be empty. Notice that it’s not a pointer value anymore and that we don’t have the omitempty annotation.

After changing the Spec, re-generate the code based on it by running make generate. This updates the previously mentioned zz_generated.deepcopy.go file.

Creating/updating manifests

Now we need to generate manifests such as our CRD and the ClusterRole that is bound to the Operator's ServiceAccount.

We run the make manifests command, which generates our CRD and our ClusterRole as follows:

config/crd/bases/operator.example.com_nginxoperators.yaml

---

apiVersion: apiextensions.k8s.io/v1

kind: CustomResourceDefinition

metadata:

annotations:

controller-gen.kubebuilder.io/version: v0.15.0

name: nginxoperators.operator.example.com

spec:

group: operator.example.com

names:

kind: NginxOperator

listKind: NginxOperatorList

plural: nginxoperators

singular: nginxoperator

scope: Namespaced

versions:

- name: v1alpha1

schema:

openAPIV3Schema:

description: NginxOperator is the Schema for the nginxoperators API

properties:

apiVersion:

description: |-

APIVersion defines the versioned schema of this representation of an object.

Servers should convert recognized schemas to the latest internal value, and

may reject unrecognized values.

More info: https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#resources

type: string

kind:

description: |-

Kind is a string value representing the REST resource this object represents.

Servers may infer this from the endpoint the client submits requests to.

Cannot be updated.

In CamelCase.

More info: https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#types-kinds

type: string

metadata:

type: object

spec:

description: NginxOperatorSpec defines the desired state of NginxOperator

properties:

forceDeploy:

description: ForceDeploy deploy for manual re-deployment of our Operator,

can be any value

type: string

port:

description: Port on which the Nginx Pod will be exposed

format: int32

type: integer

replicas:

description: Replicas is the number of deployment replicas we wish

to scale

format: int32

type: integer

type: object

status:

description: NginxOperatorStatus defines the observed state of NginxOperator

type: object

type: object

served: true

storage: true

subresources:

status: {}

config/rbac/role.yaml

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

name: manager-role

rules:

- apiGroups:

- operator.example.com

resources:

- nginxoperators

verbs:

- create

- delete

- get

- list

- patch

- update

- watch

- apiGroups:

- operator.example.com

resources:

- nginxoperators/finalizers

verbs:

- update

- apiGroups:

- operator.example.com

resources:

- nginxoperators/status

verbs:

- get

- patch

- update

The ClusterRole is our Role-Based Access Control (RBAC) definition. It is bound to the Operator’s ServiceAccount and defines all the actions our Operator is allowed to perform on its Custom Resource (CR).

The role.yaml above sets access control on the following resources

- nginxoperators

- nginxoperators/finalizers

- nginxoperators/status

As you can see, a lot of configuration is generated above. That’s why these generators are so helpful and safe: we don’t accidentally miss a dependency between manifests during development.

Additional deployment manifest

Since in our logic we will be creating a new Deployment when it does not exist, we need to have a default configuration for that deployment. To make it easier to manage we can create a yaml file definition of it and include it in the operator.

Create the following directory structure

assets/

├─ assets.go

├─ manifests/

│ ├─ nginx_deployment.yaml

where nginx_deployment.yaml looks like this

apiVersion: apps/v1

kind: Deployment

metadata:

name: "nginx-deployment"

namespace: "nginx-operator-ns"

labels:

app: nginx

spec:

replicas: 1

selector:

matchLabels:

app: nginx

template:

metadata:

labels:

app: nginx

spec:

containers:

- name: nginx

ports:

- containerPort: 8080

name: "nginx"

image: "nginx:latest"

and our assets.go will be

package assets

import (

"embed"

v1 "k8s.io/api/apps/v1"

"k8s.io/apimachinery/pkg/runtime"

"k8s.io/apimachinery/pkg/runtime/serializer"

)

var (

//go:embed manifests/*

manifests embed.FS

appsScheme = runtime.NewScheme()

appsCodecs = serializer.NewCodecFactory(appsScheme)

)

func init() {

if err := v1.AddToScheme(appsScheme); err != nil {

panic(err)

}

}

func GetDeploymentFromFile(name string) *v1.Deployment {

deploymentBytes, err := manifests.ReadFile(name)

if err != nil {

panic(err)

}

deploymentObject, err := runtime.Decode(

appsCodecs.UniversalDecoder(v1.SchemeGroupVersion),

deploymentBytes,

)

if err != nil {

panic(err)

}

return deploymentObject.(*v1.Deployment)

}

We would then use it like so

// The import path must match the module path passed to operator-sdk init --repo

import "github.com/example/nginx-operator/assets"

// Will be used in the reconciliation loop to retrieve deployment default config

deployment := assets.GetDeploymentFromFile("manifests/nginx_deployment.yaml")

When building the Operator image, add COPY assets/ assets to the Dockerfile so that our default manifest is included in the build.

We can also create the deployment manifest directly in code; which one you prefer is up to you. We would write a create function like the following:

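// Assumes these imports: v1 "k8s.io/api/apps/v1", v2 "k8s.io/api/core/v1",

// metav1 "k8s.io/apimachinery/pkg/apis/meta/v1" and "k8s.io/utils/ptr"

func createDefaultNginxDeployment() *v1.Deployment {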

return &v1.Deployment{

ObjectMeta: metav1.ObjectMeta{

Name: "nginx-deployment", // object names must be lowercase

Namespace: "nginx-operator-ns",

},

Spec: v1.DeploymentSpec{

Replicas: ptr.To[int32](1),

Selector: &metav1.LabelSelector{

MatchLabels: map[string]string{"app": "nginx"},

},

Template: v2.PodTemplateSpec{

ObjectMeta: metav1.ObjectMeta{

Labels: map[string]string{"app": "nginx"},

},

Spec: v2.PodSpec{

Containers: []v2.Container{

{

Image: "nginx:latest",

Name: "nginx",

Ports: []v2.ContainerPort{

{

ContainerPort: 8080,

Name: "nginx",

},

},

},

},

},

},

},

}

}

Before proceeding to the reconciliation loop, let’s make a minor update of the ClusterRole by adding a tag in the controller file:

internal/controller/nginxoperator_controller.go

Find these boilerplate configurations above the Reconcile method

// +kubebuilder:rbac:groups=operator.example.com,resources=nginxoperators,verbs=get;list;watch;create;update;patch;delete

// +kubebuilder:rbac:groups=operator.example.com,resources=nginxoperators/status,verbs=get;update;patch

// +kubebuilder:rbac:groups=operator.example.com,resources=nginxoperators/finalizers,verbs=update

add one more at the end

// +kubebuilder:rbac:groups=apps,resources=deployments,verbs=get;list;watch;create;update;patch;delete

Now run make manifests. It will update config/rbac/role.yaml by adding another entry to the rules list:

- apiGroups:

- apps

resources:

- deployments

verbs:

- create

- delete

- get

- list

- patch

- update

- watch

This rule allows our Operator to execute the above actions on Deployment resources.
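To illustrate how such rules are read, here is a simplified sketch of an RBAC check. It is not the real authorizer: the Rule type and the allowed and managerRules helpers are assumptions, and wildcards and resourceNames are ignored. It only shows that rules are additive allow-lists and that a subresource such as nginxoperators/status is matched as its own resource.

```go
package main

import "fmt"

// Rule is a simplified stand-in for one entry in the ClusterRole's rules list.
type Rule struct {
	APIGroups []string
	Resources []string
	Verbs     []string
}

func contains(list []string, item string) bool {
	for _, v := range list {
		if v == item {
			return true
		}
	}
	return false
}

// allowed reports whether any rule grants the verb on the resource in the
// given API group. There are no deny rules: anything not listed is refused.
func allowed(rules []Rule, group, resource, verb string) bool {
	for _, r := range rules {
		if contains(r.APIGroups, group) && contains(r.Resources, resource) && contains(r.Verbs, verb) {
			return true
		}
	}
	return false
}

// managerRules mirrors the generated role.yaml, including the deployments rule.
func managerRules() []Rule {
	all := []string{"create", "delete", "get", "list", "patch", "update", "watch"}
	group := []string{"operator.example.com"}
	return []Rule{
		{APIGroups: group, Resources: []string{"nginxoperators"}, Verbs: all},
		{APIGroups: group, Resources: []string{"nginxoperators/finalizers"}, Verbs: []string{"update"}},
		{APIGroups: group, Resources: []string{"nginxoperators/status"}, Verbs: []string{"get", "patch", "update"}},
		{APIGroups: []string{"apps"}, Resources: []string{"deployments"}, Verbs: all},
	}
}

func main() {
	rules := managerRules()
	fmt.Println(allowed(rules, "apps", "deployments", "create")) // granted by the new rule
	fmt.Println(allowed(rules, "", "pods", "get"))               // pods are not listed
}
```

If the Operator later needs to manage another kind of resource, such as Services, it would need a matching marker and rule in the same way.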

Reconciliation loop ➰

Now that we have our API defined both in manifests and in code, we can turn our attention to writing the actual logic of our Operator in the Reconcile method of internal/controller/nginxoperator_controller.go.

What events to watch?

The events to watch are configured in the SetupWithManager method of the controller.

// SetupWithManager sets up the controller with the Manager.

func (r *NginxOperatorReconciler) SetupWithManager(mgr ctrl.Manager) error {

return ctrl.NewControllerManagedBy(mgr).

For(&operatorv1alpha1.NginxOperator{}).

Complete(r)

}

It only watches create/change events of our NginxOperator resource. Let’s configure it to also watch the Deployment resource it owns.

// SetupWithManager sets up the controller with the Manager.

func (r *NginxOperatorReconciler) SetupWithManager(mgr ctrl.Manager) error {

return ctrl.NewControllerManagedBy(mgr).

For(&operatorv1alpha1.NginxOperator{}).

Owns(&v1.Deployment{}).

Complete(r)

}

The Owns function won’t work for now because there is no way to tell that a Deployment belongs to the Operator. We will solve this a bit later.

Reconcile method structure

The boilerplate gives us a nice starting point

func (r *NginxOperatorReconciler) Reconcile(ctx context.Context, req ctrl.Request) (ctrl.Result, error) {

logger := log.FromContext(ctx)

// TODO(user): your logic here

logger.Info("Received event")

return ctrl.Result{}, nil

}

The code above consumes the event successfully by returning a nil error.

Requests that fail (Reconcile returns an error) are put back into the queue for re-processing. To avoid a tight retry loop that would overwhelm the controller, the queue applies rate limiting with an exponential backoff strategy by default.

Note that the default controller-runtime queue has no maximum retry count: a request that keeps failing keeps being retried, with the delay between attempts growing up to the rate limiter's maximum backoff. If you want to give up after a number of attempts, you need to implement that yourself, for example by logging the failure and returning a nil error once you consider it permanent.

You can also ask for a retry yourself: return reconcile.Result{Requeue: true} to re-queue the request through the same rate limiter, or reconcile.Result{RequeueAfter: duration} to schedule the retry after a fixed delay.

You can of course customize the queue behavior to your liking in the same controller file

// SetupWithManager sets up the controller with the Manager.

func (r *NginxOperatorReconciler) SetupWithManager(mgr ctrl.Manager) error {

return ctrl.NewControllerManagedBy(mgr).

For(&operatorv1alpha1.NginxOperator{}).

WithOptions(controller.Options{

MaxConcurrentReconciles: 5, // Limits concurrency

RateLimiter: workqueue.NewItemExponentialFailureRateLimiter(

1*time.Second, // Base backoff duration

30*time.Second, // Max backoff duration

),

}).

Complete(r)

}

Test run

Make sure beforehand that you have created a test k8s cluster with kind (in the commands below, k is a shell alias for kubectl)

kind create cluster

# Make sure you are using the new kind context

k config get-contexts

#CURRENT NAME CLUSTER AUTHINFO NAMESPACE

# docker-desktop docker-desktop docker-desktop nginx-operator-ns

#* kind-kind kind-kind kind-kind

If you try to start the controller with make run, you will get an error because we are trying to watch the NginxOperator resource, which is not yet defined in Kubernetes.

2025-01-11T20:17:47+01:00 INFO setup starting manager

2025-01-11T20:17:47+01:00 INFO starting server {"name": "health probe", "addr": "[::]:8081"}

2025-01-11T20:17:47+01:00 INFO Starting EventSource {"controller": "nginxoperator", "controllerGroup": "operator.example.com", "controllerKind": "NginxOperator", "source": "kind source: *v1alpha1.NginxOperator"}

2025-01-11T20:17:47+01:00 INFO Starting Controller {"controller": "nginxoperator", "controllerGroup": "operator.example.com", "controllerKind": "NginxOperator"}

2025-01-11T20:17:47+01:00 ERROR controller-runtime.source.EventHandler if kind is a CRD, it should be installed before calling Start {"kind": "NginxOperator.operator.example.com", "error": "no matches for kind \"NginxOperator\" in version \"operator.example.com/v1alpha1\""}

sigs.k8s.io/controller-runtime/pkg/internal/source.(*Kind[...]).Start.func1.1

/Users/marko1886/go/pkg/mod/sigs.k8s.io/controller-runtime@v0.18.4/pkg/internal/source/kind.go:71

k8s.io/apimachinery/pkg/util/wait.loopConditionUntilContext.func1

/Users/marko1886/go/pkg/mod/k8s.io/apimachinery@v0.30.1/pkg/util/wait/loop.go:53

k8s.io/apimachinery/pkg/util/wait.loopConditionUntilContext

/Users/marko1886/go/pkg/mod/k8s.io/apimachinery@v0.30.1/pkg/util/wait/loop.go:54

k8s.io/apimachinery/pkg/util/wait.PollUntilContextCancel

/Users/marko1886/go/pkg/mod/k8s.io/apimachinery@v0.30.1/pkg/util/wait/poll.go:33

sigs.k8s.io/controller-runtime/pkg/internal/source.(*Kind[...]).Start.func1

/Users/marko1886/go/pkg/mod/sigs.k8s.io/controller-runtime@v0.18.4/pkg/internal/source/kind.go:64

To add our CRD from the manifests, execute make install, then run the controller again with make run and you will see it working

make run

/Users/marko1886/IdeaProjects/nginx-operator/bin/controller-gen rbac:roleName=manager-role crd webhook paths="./..." output:crd:artifacts:config=config/crd/bases

/Users/marko1886/IdeaProjects/nginx-operator/bin/controller-gen object:headerFile="hack/boilerplate.go.txt" paths="./..."

go fmt ./...

go vet ./...

go run ./cmd/main.go

2025-01-11T20:34:14+01:00 INFO setup starting manager

2025-01-11T20:34:14+01:00 INFO starting server {"name": "health probe", "addr": "[::]:8081"}

2025-01-11T20:34:14+01:00 INFO Starting EventSource {"controller": "nginxoperator", "controllerGroup": "operator.example.com", "controllerKind": "NginxOperator", "source": "kind source: *v1alpha1.NginxOperator"}

2025-01-11T20:34:14+01:00 INFO Starting Controller {"controller": "nginxoperator", "controllerGroup": "operator.example.com", "controllerKind": "NginxOperator"}

2025-01-11T20:34:14+01:00 INFO Starting workers {"controller": "nginxoperator", "controllerGroup": "operator.example.com", "controllerKind": "NginxOperator", "worker count": 1}

Let’s list the custom resource definitions with the Kubernetes CLI

k get crds

# NAME CREATED AT

# nginxoperators.operator.example.com 2025-01-11T19:29:33Z

If you try to execute k get pods or k get deployments you will see nothing. This is because we haven’t created our CR, just its definition. The boilerplate includes a sample file at config/samples/operator_v1alpha1_nginxoperator.yaml that we need to update to match our schema.

apiVersion: operator.example.com/v1alpha1

kind: NginxOperator

metadata:

labels:

app.kubernetes.io/name: nginx-operator

app.kubernetes.io/managed-by: kustomize

name: nginxoperator-sample

spec:

port: 8080

replicas: 1

Then create this resource in our cluster

k apply -f config/samples/operator_v1alpha1_nginxoperator.yaml

# nginxoperator.operator.example.com/nginxoperator-sample created

The operator then prints the event:

2025-01-11T20:42:30+01:00 INFO Received event {"controller": "nginxoperator", "controllerGroup": "operator.example.com", "controllerKind": "NginxOperator", "NginxOperator": {"name":"nginxoperator-sample","namespace":"default"}, "namespace": "default", "name": "nginxoperator-sample", "reconcileID": "34773486-0f84-49f9-8d92-f3b942ce3775"}

Now we have a good starting point as we can run the controller whenever we want and test our code.

Designing the loop logic

Neither the Operator's custom resource nor any other cluster state is passed to the Reconcile function. It only receives the namespace and name of the object that triggered it.

When an event hits the reconciliation loop it carries no information about the state, so the reconciliation function needs to query the actual state in order to compare it with the desired one. This is known as level-based triggering. The alternative is edge-based triggering, where the event itself carries all the information. The trade-off is efficiency versus reliability, and here we need reliability.
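The following toy sketch shows why level-based triggering is the more reliable choice. Everything in it is an assumption made for illustration: the cluster type stands in for the API server, and reconcile receives only a key, much like ctrl.Request. Even when several changes collapse into a single event, the result is correct, because the function reads the current state instead of trusting the event.

```go
package main

import "fmt"

// cluster is a toy stand-in for the API server: desired holds the replica
// count from each custom resource, actual holds what is currently running.
type cluster struct {
	desired map[string]int
	actual  map[string]int
}

// reconcile receives only the key of the object. It looks up the desired and
// actual state itself and makes the actual state match the desired one.
func (c *cluster) reconcile(key string) {
	want, ok := c.desired[key]
	if !ok {
		delete(c.actual, key) // the resource is gone, clean up
		return
	}
	if c.actual[key] != want {
		c.actual[key] = want
	}
}

func main() {
	key := "default/nginxoperator-sample"
	c := &cluster{desired: map[string]int{}, actual: map[string]int{}}

	// The spec changes three times, but only one event reaches the controller.
	c.desired[key] = 1
	c.desired[key] = 3
	c.desired[key] = 2
	c.reconcile(key)

	fmt.Println(c.actual[key]) // the latest desired value wins
}
```

An edge-based handler that applied each change from the event payload would end up in the wrong state as soon as one event was missed or arrived out of order.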

OK, now let's get back to writing our custom controller logic, starting with pseudocode and comments to design the loop.

// Pseudocode: getMyCRD, getRelatedResources, createNewResources,
// updateResources and NotFound are placeholders, not real functions.
func (r *NginxOperatorReconciler) Reconcile(ctx context.Context, req ctrl.Request) (ctrl.Result, error) {
	_ = log.FromContext(ctx)

	// Retrieve our custom resource with its configuration state;
	// if there is an error - fail and log so that the user creates it
	myCrd, err := getMyCRD()
	if err != nil {
		return ctrl.Result{}, err
	}

	// Retrieve our related Deployment, if it does not exist - create it
	resources, err := getRelatedResources()
	if err != nil {
		if err == NotFound {
			resources = createNewResources()
		} else {
			// fail for unknown error
			return ctrl.Result{}, err
		}
	}

	// Compare the actual cluster state with the desired configuration state;
	// if different, correct the state
	if resources.Spec != myCrd.Spec {
		updateResources(myCrd.Spec)
	}

	return ctrl.Result{}, nil
}

Implementation

Now that we have a general idea, we can start writing the actual code (this logic pretty much summarizes all controllers).

First, let’s retrieve the Operator resource with its configuration.

func (r *NginxOperatorReconciler) Reconcile(ctx context.Context, req ctrl.Request) (ctrl.Result, error) {

logger := log.FromContext(ctx)

logger.Info("Received event")

operatorResource := &operatorv1alpha1.NginxOperator{}

if err := r.Get(ctx, req.NamespacedName, operatorResource); err != nil {

if kerrors.IsNotFound(err) {

logger.Info("operator resource object not found")

return ctrl.Result{}, nil

}

logger.Error(err, "error getting operator resource object")

return ctrl.Result{}, err

}

return ctrl.Result{}, nil

}

If it is not found we don’t want to fail, so we just ignore it; for any other error we want to fail and report it. The kerrors package ("k8s.io/apimachinery/pkg/api/errors") gives us some nice utility functions for error handling. NamespacedName identifies the exact resource by its namespace and name.

From Operator framework best practices:

“Operators shouldn’t hard code the namespaces they are watching. This should be configurable - having no namespace supplied is interpreted as watching all namespaces”

Now that we have our Operator resource and its configuration, let’s check the Deployment that we want to create/manage. We, again, do the same thing of checking if it already exists, but now if it does not exist - we create it.

func (r *NginxOperatorReconciler) Reconcile(ctx context.Context, req ctrl.Request) (ctrl.Result, error) {

// ... previous code

// Fetch our deployment

deployment := &v1.Deployment{}

if err := r.Get(ctx, req.NamespacedName, deployment); err != nil {

// Invert the check and fail early on any other error kind to keep the code clearer

if !kerrors.IsNotFound(err) {

return ctrl.Result{}, fmt.Errorf("failed fetching deployment: %w", err)

}

// Create new deployment

logger.Info("deployment does not exist, creating new one")

deployment = assets.GetDeploymentFromFile("manifests/nginx_deployment.yaml")

deployment.Name = req.Name

deployment.Namespace = req.Namespace

// Set owner reference

if err = ctrl.SetControllerReference(operatorResource, deployment, r.Scheme); err != nil {

return ctrl.Result{}, fmt.Errorf("failed setting controller reference: %w", err)

}

if createErr := r.Create(ctx, deployment); createErr != nil {

return ctrl.Result{}, fmt.Errorf("failed creating new deployment: %w", createErr)

}

}

logger.Info("retrieved deployment resource", "deployment", deployment)

return ctrl.Result{}, nil

}

You probably noticed that we have added the SetControllerReference function call. This call marks the Deployment resource as belonging to this Operator. This is important first because of k8s garbage collection, and second so that we can track events on that resource and react to them.

Earlier we configured the controller to watch owned Deployments (Owns(&v1.Deployment{})), but it didn’t work because it needed this owner reference to know that a Deployment belongs to this Operator.

You can test this by commenting out the SetControllerReference function call and verifying:

- Delete the deployment first (the previous one was not marked)

k delete deployment nginxoperator-sample

- Run the Operator

make run

# Check the current deployment port value

k get deployments nginxoperator-sample -o yaml | grep containerPort

# - containerPort: 8080

- Change the port with a patch command

k patch deployment nginxoperator-sample -n default --type=json -p='[{"op": "replace", "path": "/spec/template/spec/containers/0/ports/0/containerPort", "value": 9090}]'

# deployment.apps/nginxoperator-sample patched

- Fetch the port again, you should see that it is now 9090.

Now if you un-comment the code and repeat this whole flow, you will see the port immediately reverted to 8080. The logs will also show more events coming into the Operator.
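Conceptually, the owner reference is what lets an event on a Deployment be translated into a reconcile request for the NginxOperator that controls it. The sketch below illustrates that mapping with simplified stand-in types; ownerRef, object and requestFor are assumptions made for illustration, not the controller-runtime implementation.

```go
package main

import "fmt"

// ownerRef is a simplified stand-in for metav1.OwnerReference.
type ownerRef struct {
	Kind       string
	Name       string
	Controller bool
}

// object is a simplified stand-in for any namespaced Kubernetes object.
type object struct {
	Namespace string
	Name      string
	Owners    []ownerRef
}

// requestFor returns the "namespace/name" key of the controlling owner of the
// given kind, or false when the object is not marked as owned by one.
func requestFor(obj object, ownerKind string) (string, bool) {
	for _, ref := range obj.Owners {
		if ref.Controller && ref.Kind == ownerKind {
			// The owner of a namespaced object lives in the same namespace.
			return obj.Namespace + "/" + ref.Name, true
		}
	}
	return "", false
}

func main() {
	marked := object{
		Namespace: "default",
		Name:      "nginxoperator-sample",
		Owners:    []ownerRef{{Kind: "NginxOperator", Name: "nginxoperator-sample", Controller: true}},
	}
	unmarked := object{Namespace: "default", Name: "nginxoperator-sample"}

	key, ok := requestFor(marked, "NginxOperator")
	fmt.Println(key, ok)

	key, ok = requestFor(unmarked, "NginxOperator")
	fmt.Println(key, ok)
}
```

An unmarked Deployment produces no request at all, which matches the behavior seen in the test above when SetControllerReference is commented out.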

Before we run this, first check whether the deployment exists with k get deployments. Once you have verified that it doesn’t (or after you delete it with k delete deployment nginxoperator-sample), run the Operator and the logs should show the deployment being created:

2025-01-14T16:37:41+01:00 INFO setup starting manager

2025-01-14T16:37:41+01:00 INFO starting server {"name": "health probe", "addr": "[::]:8081"}

2025-01-14T16:37:41+01:00 INFO Starting EventSource {"controller": "nginxoperator", "controllerGroup": "operator.example.com", "controllerKind": "NginxOperator", "source": "kind source: *v1alpha1.NginxOperator"}

2025-01-14T16:37:41+01:00 INFO Starting Controller {"controller": "nginxoperator", "controllerGroup": "operator.example.com", "controllerKind": "NginxOperator"}

2025-01-14T16:37:42+01:00 INFO Starting workers {"controller": "nginxoperator", "controllerGroup": "operator.example.com", "controllerKind": "NginxOperator", "worker count": 1}

2025-01-14T16:37:42+01:00 INFO Received event {"controller": "nginxoperator", "controllerGroup": "operator.example.com", "controllerKind": "NginxOperator", "NginxOperator": {"name":"nginxoperator-sample","namespace":"default"}, "namespace": "default", "name": "nginxoperator-sample", "reconcileID": "1b61eedf-6a72-4e03-b595-2d0de33b4047"}

2025-01-14T16:37:42+01:00 INFO deployment does not exist, creating new one {"controller": "nginxoperator", "controllerGroup": "operator.example.com", "controllerKind": "NginxOperator", "NginxOperator": {"name":"nginxoperator-sample","namespace":"default"}, "namespace": "default", "name": "nginxoperator-sample", "reconcileID": "1b61eedf-6a72-4e03-b595-2d0de33b4047"}

2025-01-14T16:37:42+01:00 INFO retrieved deployment resource {"controller": "nginxoperator", "controllerGroup": "operator.example.com", "controllerKind": "NginxOperator", "NginxOperator": {"name":"nginxoperator-sample","namespace":"default"}, "namespace": "default", "name": "nginxoperator-sample", "reconcileID": "1b61eedf-6a72-4e03-b595-2d0de33b4047", "deployment": {"namespace": "default", "name": "nginxoperator-sample"}}

If the resource already exists, we want to check its values and compare them with the operator resource configuration. Note that when we create a deployment we already set the proper values, so we need a way to distinguish whether we just created the deployment or retrieved an existing one whose values we want to check. For that we can use a flag variable created and re-arrange our code a bit.

func (r *NginxOperatorReconciler) Reconcile(ctx context.Context, req ctrl.Request) (ctrl.Result, error) {

// ... previous code

// Fetch our deployment

deployment := &v1.Deployment{}

var created bool

if err := r.Get(ctx, req.NamespacedName, deployment); err != nil {

if kerrors.IsNotFound(err) {

// Create new deployment

logger.Info("deployment does not exist, creating new one")

deployment = assets.GetDeploymentFromFile("manifests/nginx_deployment.yaml")

deployment.Name = req.Name

deployment.Namespace = req.Namespace

if err = ctrl.SetControllerReference(operatorResource, deployment, r.Scheme); err != nil {

return ctrl.Result{}, fmt.Errorf("failed setting controller reference: %w", err)

}

if createErr := r.Create(ctx, deployment); createErr != nil {

return ctrl.Result{}, fmt.Errorf("failed creating new deployment: %w", createErr)

}

created = true

} else {

return ctrl.Result{}, fmt.Errorf("failed fetching deployment: %w", err)

}

}

logger.Info("retrieved deployment resource", "deployment", deployment)

if !created {

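if operatorResource.Spec.Replicas != nil {

deployment.Spec.Replicas = operatorResource.Spec.Replicas

}

// Port is an optional pointer, so guard against a nil dereference

if operatorResource.Spec.Port != nil {

deployment.Spec.Template.Spec.Containers[0].Ports[0].ContainerPort = *operatorResource.Spec.Port

}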

if err := r.Update(ctx, deployment); err != nil {

return ctrl.Result{}, fmt.Errorf("failed updating deployment: %w", err)

}

logger.Info("updated deployment")

}

return ctrl.Result{}, nil

}

In the above example, when the deployment already existed (it was not created in this run), we copy the values from the Operator resource's spec into it and execute the update of the resource.
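One possible refinement, not part of the code above, is to call Update only when something actually differs, which avoids writing to the API server on every reconcile. The sketch below shows the idea with simplified stand-in types; spec, deployment, i32 and syncDeployment are assumptions made for illustration.

```go
package main

import "fmt"

// spec stands in for NginxOperatorSpec, deployment for the fields of the
// Deployment that the Operator manages.
type spec struct {
	Port     *int32
	Replicas *int32
}

type deployment struct {
	Port     int32
	Replicas int32
}

// i32 returns a pointer to v, handy for optional fields.
func i32(v int32) *int32 { return &v }

// syncDeployment copies the fields that are set in s onto dep and reports
// whether anything changed, so the caller knows if an update is needed.
func syncDeployment(s spec, dep *deployment) bool {
	changed := false
	if s.Replicas != nil && dep.Replicas != *s.Replicas {
		dep.Replicas = *s.Replicas
		changed = true
	}
	if s.Port != nil && dep.Port != *s.Port {
		dep.Port = *s.Port
		changed = true
	}
	return changed
}

func main() {
	dep := &deployment{Port: 9090, Replicas: 1}
	desired := spec{Port: i32(8080)} // replicas not set, so left untouched

	fmt.Println(syncDeployment(desired, dep), dep.Port) // first pass fixes the port
	fmt.Println(syncDeployment(desired, dep), dep.Port) // second pass is a no-op
}
```

In the real Reconcile method, the returned flag would decide whether r.Update is called at all.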

As mentioned, this is a quick example to get you started. In the next sections we will cover other important topics like error logging/reporting. When developing, it’s good practice to follow an iterative approach: get the basic functionality running, then add more on top of it.

Failure reporting 🚨

Errors can happen in the Operator, in the Operand, or sometimes in both. The Operator will try to handle those errors, maybe even re-queue with a delay and try again later, but some errors will require manual inspection and possibly intervention. For those cases, our Operator needs to report errors properly via logging, status updates and events, which we will cover next.

Logging errors

This is the same approach we use for apps like web servers: standard logs of our app's behavior and/or failures.

logger.Info("retrieved deployment resource", "deployment", deployment)

We can then retrieve them by executing kubectl to show us logs of a pod, for example:

k logs pod/my-pod

# Or follow the logs, starting from the last 10 lines

k logs -f pod/my-pod --tail 10

You can use the grep tool to filter the output with a regex, and you can set log levels for more detailed logs if needed, based on the Operator SDK logging configuration.

An important thing to know: logs in Kubernetes are temporary. The moment the pod in this example goes down, its logs are lost once the Garbage Collector (GC) cleans it up.

Error events

Kubernetes events are a native Kubernetes resource like Pods or any other resource and are a good way to record important events related to that resource that happened in its lifecycle.

You will usually check events before going to check logs.

# Get all events

k get events

# List events for a specific pod and watch

k events --for pod/web-pod-13je7 --watch

# Get a human-readable description of the pod with events listed at the end

k describe pod my-pod

Example of retrieving all events of all resources

$ kubectl get events -n my-namespace

LAST SEEN   TYPE      REASON              OBJECT                            MESSAGE

10s         Normal    Created             pod/my-app-12345                  Successfully created pod

10s         Normal    Scheduled           pod/my-app-12345                  Successfully assigned my-namespace/my-app-12345 to node-worker-1

8s          Normal    Pulled              pod/my-app-12345                  Successfully pulled image "nginx:latest"

7s          Normal    Created             pod/my-app-12345                  Created container my-app-container

7s          Normal    Started             pod/my-app-12345                  Started container my-app-container

6s          Warning   BackOff             pod/my-app-12345                  Back-off restarting failed container

5s          Normal    ScalingReplicaSet   deployment/my-deployment          Scaled up replica set my-deployment-67890 to 1

4s          Normal    SuccessfulCreate    replicaset/my-deployment-67890    Created pod: my-deployment-67890-abcdef

3s          Normal    Reconciled          customresource/my-resource        Successfully reconciled custom resource

2s          Warning   ReconcileFailed     customresource/my-resource        Error during reconciliation: Failed to connect to external service

These messages include fields:

- Reason - Name of the event, starting upper-case and in camel-case style

- Type - Either Normal or Warning, can be used for filtering

- Message - Detailed description of the event reason

You can filter them by adding the --field-selector type=Warning flag to the above command.

Events should not be emitted excessively, but used deliberately to explain the lifecycle of the resource they relate to.

Let’s add some events to our example Operator from above. To start, we first need to add a new field to the controller, Record record.EventRecorder:

type NginxOperatorReconciler struct {

client.Client

Scheme *runtime.Scheme

Record record.EventRecorder

}

then update the cmd/main.go file where we create the reconciler to add Record: mgr.GetEventRecorderFor("my-operator")

if err = (&controller.NginxOperatorReconciler{

Client: mgr.GetClient(),

Scheme: mgr.GetScheme(),

Record: mgr.GetEventRecorderFor("my-operator"),

}).SetupWithManager(mgr); err != nil {

setupLog.Error(err, "unable to create controller", "controller", "NginxOperator")

os.Exit(1)

}

Then we can use it in the controller to send events. For example, we can add it on the line right after we create the deployment for the first time (v2 is assumed here to be the import alias for k8s.io/api/core/v1, which defines EventTypeNormal):

// ...after r.Create

created = true

r.Record.Event(deployment, v2.EventTypeNormal, "Creating", "Deployment created")

Kubernetes aggregates duplicate events and increases their counter, so we don’t see the same message repeated over and over, flooding the list.

It’s good to keep messages as fixed strings and avoid high cardinality, which would create massive lists of distinct messages. An example of bad practice is embedding something with high cardinality, like an id: fmt.Sprintf("Deployment %d created", id).

If a message can be dynamic with a variable that has 2 or 3 possible values, then it’s fine.
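To see why message cardinality matters, here is a toy model of event aggregation. It is only a sketch: the eventKey type and the aggregate function are hypothetical names introduced for illustration, and they merely stand in for the deduplication Kubernetes performs, which is more involved in practice.

```go
package main

import "fmt"

// eventKey is a hypothetical stand-in for the fields that decide
// whether two events count as duplicates of each other.
type eventKey struct {
	Object  string
	Reason  string
	Message string
}

// aggregate counts identical events instead of storing each one separately.
func aggregate(events []eventKey) map[eventKey]int {
	counts := make(map[eventKey]int)
	for _, e := range events {
		counts[e]++
	}
	return counts
}

func main() {
	var fixed, dynamic []eventKey
	for id := 0; id < 100; id++ {
		// Fixed message: every event is identical and can be collapsed.
		fixed = append(fixed, eventKey{"deployment/nginx", "Created", "Deployment created"})
		// Message with an id: every event is unique and nothing collapses.
		dynamic = append(dynamic, eventKey{"deployment/nginx", "Created", fmt.Sprintf("Deployment %d created", id)})
	}
	fmt.Println("distinct events with a fixed message:", len(aggregate(fixed)))
	fmt.Println("distinct events with an id in the message:", len(aggregate(dynamic)))
}
```

With a fixed message the hundred events collapse into a single entry with a counter, while the id-based message produces a hundred separate entries.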

And maybe 1 more for when the deployment is updated

r.Record.Event(deployment, v2.EventTypeNormal, "Updated", "Updated deployment")

After running the Operator, you can retrieve the events and see them with k get events. Try deleting the deployment and see what happens 😉

More information about creating/raising events can be found in the Kubebuilder book (book.kubebuilder.io), in the section on raising events.

Status updates

As mentioned previously, our Operator resource specification (CRD) has a spec field and a status. spec specifies the desired state and status serves to report the current status of the Operator.

The status field would have conditions like this:

conditions:

- type: Ready

status: "True"

lastProbeTime: null

lastTransitionTime: "2018-01-01T00:00:00Z"

Because we haven’t declared these conditions in our NginxOperatorStatus struct in the first section (they are omitted by default), we need to do that now. Update the api/v1alpha1/nginxoperator_types.go file to add the Conditions field.

// NginxOperatorStatus defines the observed state of NginxOperator

type NginxOperatorStatus struct {

// Conditions is a list of status condition updates

Conditions []metav1.Condition `json:"conditions"`

// INSERT ADDITIONAL STATUS FIELD - define observed state of cluster

// Important: Run "make" to regenerate code after modifying this file

}

Now run make to regenerate the code and manifests (config/crd/bases/operator.example.com_nginxoperators.yaml) based on the file.

Then add the logic that sets a status condition on the Operator resource object and updates it at the end of the reconcile loop function:

meta.SetStatusCondition(&operatorResource.Status.Conditions, metav1.Condition{

Type: "OperatorReady",

Status: metav1.ConditionTrue,

LastTransitionTime: metav1.NewTime(time.Now()),

Reason: "OperatorSucceeded",

Message: "Operator ran successfully",

})

// Update the status

if err := r.Status().Update(ctx, operatorResource); err != nil {

return ctrl.Result{}, fmt.Errorf("failed to update status condition: %w", err)

}

return ctrl.Result{}, nil

Run the Operator, then let’s check to see if there are any conditions in our Operator resource status

k describe nginxoperator nginxoperator-sample

#Name: nginxoperator-sample

#Namespace: default

#Labels: app.kubernetes.io/managed-by=kustomize

# app.kubernetes.io/name=nginx-operator

#Annotations: <none>

#API Version: operator.example.com/v1alpha1

#Kind: NginxOperator

#Metadata:

# Creation Timestamp: 2025-01-15T13:49:39Z

# Generation: 1

# Resource Version: 136436

# UID: d5516554-e69b-4737-b87e-531d230b220e

#Spec:

# Port: 8080

# Replicas: 1

#Status:

# Conditions:

# Last Transition Time: 2025-01-15T13:49:39Z

# Message: Operator ran successfully

# Reason: OperatorSucceeded

# Status: True

# Type: OperatorReady

#Events: <none>

As you can see, the condition is written to our resource status field

Status:

Conditions:

Last Transition Time: 2025-01-15T13:49:39Z

Message: Operator ran successfully

Reason: OperatorSucceeded

Status: True

Type: OperatorReady

Generally, it’s good to have a couple of conditions that get updated instead of having an append only list of them. Constants on Reason strings can help with that

const (

ReasonOperatorSucceeded = "OperatorSucceeded"

ReasonOperatorDegraded = "OperatorDegraded"

)

so we avoid accidental mistakes when typing. Values of these strings should always be CamelCase.
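The difference between updating a condition and appending a new one can be sketched with plain Go types. This is a simplified model under stated assumptions, not the apimachinery implementation: Condition, setCondition, TypeOperatorReady and the example timestamps t0 and t1 are hypothetical names, and the real meta.SetStatusCondition handles more fields.

```go
package main

import (
	"fmt"
	"time"
)

const (
	TypeOperatorReady       = "OperatorReady"
	ReasonOperatorSucceeded = "OperatorSucceeded"
	ReasonOperatorDegraded  = "OperatorDegraded"
)

// Condition is a trimmed-down, hypothetical stand-in for metav1.Condition.
type Condition struct {
	Type, Status, Reason, Message string
	LastTransitionTime            time.Time
}

// Example timestamps used to make the transitions visible.
var (
	t0 = time.Date(2025, 1, 15, 13, 49, 39, 0, time.UTC)
	t1 = t0.Add(5 * time.Minute)
)

// setCondition updates the condition with the same Type in place, or appends
// it when it is not present yet. LastTransitionTime only moves when Status changes.
func setCondition(conds []Condition, c Condition, now time.Time) []Condition {
	for i := range conds {
		if conds[i].Type != c.Type {
			continue
		}
		if conds[i].Status != c.Status {
			conds[i].Status = c.Status
			conds[i].LastTransitionTime = now
		}
		conds[i].Reason, conds[i].Message = c.Reason, c.Message
		return conds
	}
	c.LastTransitionTime = now
	return append(conds, c)
}

func main() {
	var conds []Condition
	conds = setCondition(conds, Condition{Type: TypeOperatorReady, Status: "True",
		Reason: ReasonOperatorSucceeded, Message: "Operator ran successfully"}, t0)
	conds = setCondition(conds, Condition{Type: TypeOperatorReady, Status: "False",
		Reason: ReasonOperatorDegraded, Message: "Deployment update failed"}, t1)
	// Still a single condition: the second call replaced the first one.
	fmt.Println(len(conds), conds[0].Status, conds[0].Reason)
}
```

Keying the list by Type keeps it short and makes the latest state of each condition easy to find.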

The only standard condition name that exists now is “Upgradeable”, used by the Operator Lifecycle Manager (OLM), which we cover in more detail later.

OLM manages the lifecycle of our Operator. With status updates we can signal that our Operator is not ready for an upgrade by setting the following condition in another resource object called OperatorCondition.

apiVersion: operators.coreos.com/v1

kind: OperatorCondition

metadata:

name: sample-operator

namespace: operator-ns

status:

conditions:

- type: Upgradeable

status: "False"

reason: "OperatorBusy"

message: "Operator is currently busy with a critical task"

lastTransitionTime: "2022-01-19T12:00:00Z"

We will need the operator-lib library; its details are located here.

Our controller would use it as follows:

import (

"github.com/operator-framework/api/pkg/operators/v2"

"github.com/operator-framework/operator-lib/conditions"

)

func (r *NginxOperatorReconciler) Reconcile(ctx context.Context, req ctrl.Request) (ctrl.Result, error) {

// ...

condition, err := conditions.InClusterFactory{r.Client}.NewCondition(v2.ConditionType(v2.Upgradeable))

if err != nil {

return ctrl.Result{}, err

}

err = condition.Set(

ctx,

metav1.ConditionTrue,

conditions.WithReason("OperatorUpgradeable"),

conditions.WithMessage("The operator is upgradeable"),

)

if err != nil {

return ctrl.Result{}, err

}

// ...

}

As before, this updates the status of the new resource object so that OLM knows whether our Operator is upgradeable or not.

If for some reason it is necessary to force OLM to ignore the Upgradeable=False condition, an administrator can use the spec.overrides field to override the values of the status, like the following:

apiVersion: operators.coreos.com/v1

kind: OperatorCondition

metadata:

name: sample-operator

namespace: operator-ns

spec:

overrides:

- type: Upgradeable

status: "True"

reason: "OperatorIsStable"

message: "Forcing an update due to bug"

status:

conditions:

- type: Upgradeable

status: "False"

reason: "OperatorBusy"

message: "Operator is currently busy with a critical task"

lastTransitionTime: "2022-01-19T12:00:00Z"
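The precedence between spec.overrides and status.conditions in the manifest above can be pictured as a simple lookup. This is a sketch of the idea only, assuming that an override of a given type wins over the reported condition; the Condition type and effectiveCondition function are hypothetical and are not part of OLM.

```go
package main

import "fmt"

// Condition is a hypothetical, minimal representation of a condition entry.
type Condition struct {
	Type, Status, Reason, Message string
}

// effectiveCondition returns the administrator override for condType when one
// exists, and otherwise falls back to the condition reported by the Operator.
func effectiveCondition(condType string, overrides, reported []Condition) (Condition, bool) {
	for _, c := range overrides {
		if c.Type == condType {
			return c, true
		}
	}
	for _, c := range reported {
		if c.Type == condType {
			return c, true
		}
	}
	return Condition{}, false
}

func main() {
	reported := []Condition{{Type: "Upgradeable", Status: "False", Reason: "OperatorBusy"}}
	overrides := []Condition{{Type: "Upgradeable", Status: "True", Reason: "OperatorIsStable"}}

	c, _ := effectiveCondition("Upgradeable", nil, reported)
	fmt.Println("without override:", c.Status, c.Reason)

	c, _ = effectiveCondition("Upgradeable", overrides, reported)
	fmt.Println("with override:", c.Status, c.Reason)
}
```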

As mentioned, more on OLM later.

Metrics reporting 📈

Metrics are a crucial part of monitoring in Kubernetes. This data can be scraped by tools like Prometheus or OpenTelemetry and visualized in Grafana.

The boilerplate of the project that was initialized with operator-sdk (in cmd/main.go) already has the code and dependencies for exposing metrics on the /metrics endpoint on port 8080. This lets us focus on the core logic without having to wire up metrics ourselves as described in the Prometheus guide.

The implementation relies on the sigs.k8s.io/controller-runtime library, which has this functionality built in and already exposes a couple of metrics prefixed with controller_runtime_. Some of them are:

- controller_runtime_reconcile_errors_total - counter for returned errors by the Reconcile function

- controller_runtime_reconcile_time_seconds_bucket - a histogram showing latency of individual reconciliation attempts

- controller_runtime_reconcile_total - Total number of reconciliations

The Operator SDK recommends following the RED method, which focuses on the following:

- Rate - How many requests per second are happening, useful for discovering if there is a hot loop

- Errors - Shows the number of failures, can indicate that more error handling is required or another problem

- Duration - How much time it takes the Operator to complete its work (latency). Slow performance can degrade cluster health

These metrics can be used to define the Operator service-level objectives (SLO).
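The three RED signals can be collected by wrapping each reconcile attempt. The sketch below uses plain Go types instead of Prometheus collectors so it stays self-contained; redStats, observe, errorRatio and errExample are hypothetical names, and in a real Operator the rate would be derived by the monitoring backend from a counter over time.

```go
package main

import (
	"errors"
	"fmt"
	"sync"
	"time"
)

// errExample is a made-up failure used to exercise the error path.
var errExample = errors.New("deployment not found")

// redStats is a hypothetical in-memory recorder for the RED signals.
type redStats struct {
	mu       sync.Mutex
	total    int           // Rate: a backend derives requests per second from this counter
	failures int           // Errors: number of failed attempts
	elapsed  time.Duration // Duration: summed latency of all attempts
}

// observe runs one reconcile attempt and records its outcome and latency.
func (s *redStats) observe(reconcile func() error) error {
	start := time.Now()
	err := reconcile()
	took := time.Since(start)

	s.mu.Lock()
	defer s.mu.Unlock()
	s.total++
	s.elapsed += took
	if err != nil {
		s.failures++
	}
	return err
}

// errorRatio returns failures divided by attempts, or 0 when nothing ran yet.
func (s *redStats) errorRatio() float64 {
	s.mu.Lock()
	defer s.mu.Unlock()
	if s.total == 0 {
		return 0
	}
	return float64(s.failures) / float64(s.total)
}

func main() {
	stats := &redStats{}
	_ = stats.observe(func() error { return nil })
	_ = stats.observe(func() error { return errExample })
	fmt.Printf("total=%d failures=%d errorRatio=%.2f\n", stats.total, stats.failures, stats.errorRatio())
}
```

An error ratio like this one is the kind of value an SLO can be written against, for example "fewer than 1% of reconciliations fail".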

We can add a simple reconcile counter metric. First, let’s create an internal/controllers/metrics/metrics.go file to initialize and expose the metric:

package metrics

import (

"github.com/prometheus/client_golang/prometheus"

"sigs.k8s.io/controller-runtime/pkg/metrics"

)

var ReconcilesTotal = prometheus.NewCounter(prometheus.CounterOpts{

Name: "nginxoperator_reconciles_total",

Help: "Number of total reconciliation attempts",

})

func init() {

metrics.Registry.MustRegister(ReconcilesTotal)

}

Then we just call the Inc function to increment our counter for every Reconcile execution.

import "github.com/sample/nginx-operator/internal/controllers/metrics"

func (r *NginxOperatorReconciler) Reconcile(ctx context.Context, req ctrl.Request) (ctrl.Result, error) {

metrics.ReconcilesTotal.Inc()

// ...

}

More info at kubebuilder metrics

Leader election 🙋♀️

During the lifetime of an Operator there is a chance that there might be duplicates of the same Operator, for example during an upgrade. When that happens, we don’t want to have multiple Operators of the same type contending over the same resources and to avoid that we use leader election, so that only 1 Operator would get selected as the one to do the actual work (reconciliation).

There are 2 types of leader election to choose from, both with pros and cons:

- Leader with lease - The leader periodically renews its leader lease and gives up leadership when it cannot renew it. This allows for easier transitions between leaders, but can lead to a split-brain problem under certain conditions.

- Leader for life - The leader pod only gives up its leader position when it is deleted (garbage collected). This avoids situations where multiple replicas compete for leadership, but it can mean a longer wait for a new election, up to around 5 minutes if node errors delay the garbage collection.

Default SDK implementation is the leader-with-lease, though we need to enable it with the flag --leader-elect.

mgr, err := ctrl.NewManager(ctrl.GetConfigOrDie(), ctrl.Options{

Scheme: scheme,

Metrics: metricsServerOptions,

WebhookServer: webhookServer,

HealthProbeBindAddress: probeAddr,

LeaderElection: enableLeaderElection,

LeaderElectionID: "a7e021da.example.com",

// LeaderElectionReleaseOnCancel defines if the leader should step down voluntarily

// when the Manager ends. This requires the binary to immediately end when the

// Manager is stopped, otherwise, this setting is unsafe. Setting this significantly

// speeds up voluntary leader transitions as the new leader don't have to wait

// LeaseDuration time first.

//

// In the default scaffold provided, the program ends immediately after

// the manager stops, so would be fine to enable this option. However,

// if you are doing or is intended to do any operation such as perform cleanups

// after the manager stops then its usage might be unsafe.

// LeaderElectionReleaseOnCancel: true,

})

As mentioned above, the pain point of leader-with-lease is that two leaders might get elected at the same time in certain scenarios involving clock skew between servers. This is because the Kubernetes client looks at timestamps to decide whether the lease has been renewed. For example, if the current leader is on Node A, where the time is correct, and the duplicate Operator is on Node B, whose clock is skewed and running 1h behind, comparing timestamps can go wrong, and we end up either with very long delays in selecting a leader or with 2 leaders at the same time.
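The effect of clock skew on a timestamp comparison can be reproduced in a few lines. This is a deliberately simplified model of the scenario described above and not the actual client-go algorithm; leaseExpired and observedExpired are hypothetical helpers, and the 15 second lease duration is an assumption chosen for the example.

```go
package main

import (
	"fmt"
	"time"
)

// leaseDuration is an assumed value for this example.
const leaseDuration = 15 * time.Second

// leaseExpired reports whether a lease last renewed at renewTime looks expired
// to an observer whose local clock reads localNow.
func leaseExpired(renewTime, localNow time.Time) bool {
	return localNow.After(renewTime.Add(leaseDuration))
}

// observedExpired models a candidate whose clock is off by skew, looking at a
// lease that the current leader renewed sinceRenew ago in real time.
func observedExpired(skew, sinceRenew time.Duration) bool {
	renewTime := time.Date(2025, 1, 15, 12, 0, 0, 0, time.UTC)
	realNow := renewTime.Add(sinceRenew)
	return leaseExpired(renewTime, realNow.Add(skew))
}

func main() {
	// Healthy case: the lease was renewed 5s ago and is seen as valid.
	fmt.Println("accurate clock, 5s after renewal:", observedExpired(0, 5*time.Second))
	// Clock ahead: a fresh lease looks expired, so a second leader may take over.
	fmt.Println("clock 1h ahead, 5s after renewal:", observedExpired(time.Hour, 5*time.Second))
	// Clock behind: a long-dead lease still looks valid, so election is delayed.
	fmt.Println("clock 1h behind, 30m after renewal:", observedExpired(-time.Hour, 30*time.Minute))
}
```

In this model a clock that runs ahead produces the two-leaders case, while a clock that runs behind produces the long-delay case.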

To remedy this, you need to synchronize the clocks on all nodes and adjust RenewDeadline and LeaseDuration when creating the new manager with ctrl.NewManager, setting LeaseDuration to a multiple of RenewDeadline.
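Before passing custom timings to the manager, it can help to sanity-check them. The sketch below is an assumption-laden illustration: validateTimings is a hypothetical helper, and the two rules it enforces (LeaseDuration greater than RenewDeadline, RenewDeadline greater than the jittered RetryPeriod) are modelled on the checks client-go is understood to apply, so verify them against the version you use.

```go
package main

import (
	"errors"
	"fmt"
	"time"
)

// jitterFactor is the assumed multiplier applied to the retry period.
const jitterFactor = 1.2

// validateTimings checks that the three leader election timings are
// consistent with each other.
func validateTimings(leaseDuration, renewDeadline, retryPeriod time.Duration) error {
	if leaseDuration <= renewDeadline {
		return errors.New("leaseDuration must be greater than renewDeadline")
	}
	if renewDeadline <= time.Duration(jitterFactor*float64(retryPeriod)) {
		return errors.New("renewDeadline must be greater than retryPeriod*jitterFactor")
	}
	return nil
}

func main() {
	// LeaseDuration set to twice the RenewDeadline, as a multiple of it.
	renewDeadline := 10 * time.Second
	leaseDuration := 2 * renewDeadline
	fmt.Println("2x multiple:", validateTimings(leaseDuration, renewDeadline, 2*time.Second))

	// A lease shorter than the renew deadline can never be renewed in time.
	fmt.Println("too short:", validateTimings(5*time.Second, renewDeadline, 2*time.Second))
}
```

A larger gap between LeaseDuration and RenewDeadline leaves more tolerance for small clock differences, at the cost of slower failover.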

More discussion on this topic can be found in this PR.

Building and deploying 📦

Since Kubernetes runs workloads as containers, we also need to build our Operator into a container image to be used in Kubernetes.

In the Makefile, change the name of the Docker image to be generated from IMG ?= controller:latest to IMG ?= nginxoperator:v0.1, or set it directly when calling the command.

IMG=nginxoperator:v0.1 make docker-build

Make sure the Dockerfile contains the line COPY assets assets/, otherwise the build will fail.

make build

# ...

make docker-build

# ...

# List the docker images

docker images

#REPOSITORY TAG IMAGE ID CREATED SIZE

#nginxoperator v0.1 6369d2535ece 10 minutes ago 100MB

Hopefully you’ve already created a test cluster with kind, but if you haven’t, do it now:

kind create cluster

# Verify that you are on the new kind cluster

k config current-context

Then you can load the docker image to the kind cluster without needing to publish it publicly

kind load docker-image nginxoperator:v0.1

Now we can deploy it with

make deploy

We can then observe the state of our Kubernetes cluster by running the following commands:

k get namespaces

#NAME STATUS AGE

#default Active 5d23h

#kube-node-lease Active 5d23h

#kube-public Active 5d23h

#kube-system Active 5d23h

#local-path-storage Active 5d23h

#nginx-operator-system Active 13s

k get all -n nginx-operator-system

#NAME READY STATUS RESTARTS AGE

#pod/nginx-operator-controller-manager-786bfb8df6-fnb9m 1/1 Running 0 22m

#

#NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

#service/nginx-operator-controller-manager-metrics-service ClusterIP 10.96.199.70 <none> 8443/TCP 22m

#

#NAME READY UP-TO-DATE AVAILABLE AGE

#deployment.apps/nginx-operator-controller-manager 1/1 1 1 22m

#

#NAME DESIRED CURRENT READY AGE

#replicaset.apps/nginx-operator-controller-manager-786bfb8df6 1 1 1 22m

k logs pod/nginx-operator-controller-manager-786bfb8df6-fnb9m -n nginx-operator-system

# ...logs

k get nginxoperators

#NAME AGE

#nginxoperator-sample 25h

k get deployments

#NAME READY UP-TO-DATE AVAILABLE AGE

#nginxoperator-sample 1/1 1 1 4s

k get pods

#NAME READY STATUS RESTARTS AGE

#nginxoperator-sample-cc4566ddc-7ftsw 1/1 Running 0 7m6s

k describe nginxoperators/nginxoperator-sample

#Name: nginxoperator-sample

#Namespace: default

#Labels: app.kubernetes.io/managed-by=kustomize

# app.kubernetes.io/name=nginx-operator

#Annotations: <none>

#API Version: operator.example.com/v1alpha1

#Kind: NginxOperator

#Metadata:

# Creation Timestamp: 2025-01-15T13:49:39Z

# Generation: 1

# Resource Version: 136436

# UID: d5516554-e69b-4737-b87e-531d230b220e

#Spec:

# Port: 8080

# Replicas: 1

#Status:

# Conditions:

# Last Transition Time: 2025-01-15T13:49:39Z

# Message: Operator ran successfully

# Reason: OperatorSucceeded

# Status: True

# Type: OperatorReady

#Events: <none>

And if we delete the deployment, we will see it being re-created:

k delete deployment nginxoperator-sample

#deployment.apps "nginxoperator-sample" deleted

k get deployments

#NAME READY UP-TO-DATE AVAILABLE AGE

#nginxoperator-sample 1/1 1 1 7s

To remove an Operator installation we can run make undeploy

Operator Lifecycle Manager (OLM) ♻️

Operator Lifecycle Manager (OLM) is a component of the operator framework and extends Kubernetes to provide a declarative way to install, manage, and upgrade Operators on a cluster.

To quote from the website:

“This project is a component of the Operator Framework, an open source toolkit to manage Kubernetes native applications, called Operators, in a streamlined and scalable way.”

Up until now we have built and deployed our Operator locally, but for public availability and production usage we need a few more steps to align with the framework’s standards.

OLM is just a bunch of Pods, Deployments, CRDs, RoleBindings, ServiceAccounts and namespaces for managing our Operators for smoother upgrading and management. In order to use OLM we need to install it into our cluster.

Before we start installing OLM, let’s purge the cluster and re-create it:

kind delete cluster

kind create cluster

Now to install OLM run

operator-sdk olm install

# This might take a while...

# Check all resources under olm namespace

k get all -n olm

Some bonus functionality for olm from the operator-sdk

operator-sdk olm status

operator-sdk olm uninstall

Building a bundle for OLM

When building for OLM, we run make bundle, which generates the bundle manifests and the additional metadata that OLM needs in order to work with our Operator image.

After running the make bundle command you will be prompted to enter some information about your Operator. Answer all prompts and you should get INFO[0000] All validation tests have completed successfully. Notice that we now have three additional directories generated within the bundle/ directory:

- tests - Configuration files for running scorecard tests that validate the Operator’s bundle.

- metadata - Contains the annotations.yaml file that provides OLM with the Operator version and its dependencies. It reflects the values of the labels in bundle.Dockerfile.

- manifests - Contains manifests of the CRD and metric-related resources.

Once the bundle manifests have been built, we run make bundle-build with the BUNDLE_IMG variable set to something like docker.io/myregistry/nginx-bundle:v0.0.1.

make bundle-build

docker images

#REPOSITORY TAG IMAGE ID CREATED SIZE

#example.com/nginx-operator-bundle v0.0.1 92ed25cd4913 6 seconds ago 80.1kB

For the next steps of publishing and running a bundle, you need an image registry where the Docker images can be hosted.

# Push the bundled image

make bundle-push

# Pull and run the bundled image

operator-sdk run bundle docker.io/myregistry/nginx-bundle:v0.0.1

Operator can be uninstalled by running the cleanup command

operator-sdk cleanup nginx-operator

Testing Operators 🔬

We won’t go into details about testing Operators; the generated test/e2e/ directory contains boilerplate for testing the Operator. You will see that the e2e tests do exactly what we were doing in this article when running the Operator and inspecting the results.

Here is a small piece of code from the tests to show what verification looks like.

verifyControllerUp := func() error {

// Get pod name

cmd = exec.Command("kubectl", "get",

"pods", "-l", "control-plane=controller-manager",

"-o", "go-template={{ range .items }}"+

"{{ if not .metadata.deletionTimestamp }}"+

"{{ .metadata.name }}"+

"{{ \"\\n\" }}{{ end }}{{ end }}",

"-n", namespace,

)

podOutput, err := utils.Run(cmd)

ExpectWithOffset(2, err).NotTo(HaveOccurred())

podNames := utils.GetNonEmptyLines(string(podOutput))

if len(podNames) != 1 {

return fmt.Errorf("expect 1 controller pods running, but got %d", len(podNames))

}

controllerPodName = podNames[0]

ExpectWithOffset(2, controllerPodName).Should(ContainSubstring("controller-manager"))

// Validate pod status

cmd = exec.Command("kubectl", "get",

"pods", controllerPodName, "-o", "jsonpath={.status.phase}",

"-n", namespace,

)

status, err := utils.Run(cmd)

ExpectWithOffset(2, err).NotTo(HaveOccurred())

if string(status) != "Running" {

return fmt.Errorf("controller pod in %s status", status)

}

return nil

}
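The snippet above relies on utils.GetNonEmptyLines from the generated test utilities to turn the kubectl output into pod names. As a rough idea of what such a helper does, here is a minimal stand-alone sketch; getNonEmptyLines is a hypothetical reimplementation written for this article and may differ from the scaffolded version.

```go
package main

import (
	"fmt"
	"strings"
)

// getNonEmptyLines splits command output into lines and drops the blank ones,
// so that trailing newlines do not show up as empty pod names.
func getNonEmptyLines(output string) []string {
	var lines []string
	for _, line := range strings.Split(output, "\n") {
		if trimmed := strings.TrimSpace(line); trimmed != "" {
			lines = append(lines, trimmed)
		}
	}
	return lines
}

func main() {
	// Output shaped like the go-template used above: one pod name per line.
	out := "nginx-operator-controller-manager-786bfb8df6-fnb9m\n\n"
	podNames := getNonEmptyLines(out)
	fmt.Println(len(podNames), podNames)
}
```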

How does an Operator handle Concurrency?

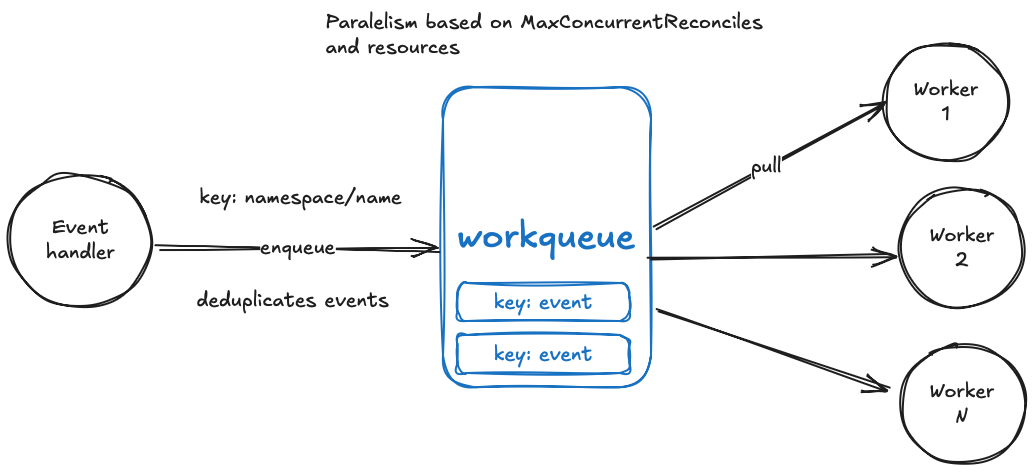

You, like me, are probably wondering what happens when there is an influx of events: how is concurrency handled, and can there be a data race or collision? That question probably leads you to a setting called MaxConcurrentReconciles. It sets the number of workers that process events concurrently, and its default value is 1, which means events are processed one at a time, sequentially. The most important detail, though, is that concurrency is applied per resource. If the Operator manages resources A and B with MaxConcurrentReconciles set to 2, one worker can reconcile resource A while another reconciles resource B, but the same resource is never reconciled by two workers at once.

“The reconciler internal queue prevents concurrent updates for the same resource name, but still allows concurrent updates for different resources when MaxConcurrentReconciles is greater than 1.” 2

Another important bit that helps with the concurrency issue is the workqueue that provides the following queue features:

- Fair: items processed in the order in which they are added.

- Stingy: a single item will not be processed multiple times concurrently, and if an item is added multiple times before it can be processed, it will only be processed once.

- Multiple consumers and producers. In particular, it is allowed for an item to be re-enqueued while it is being processed.

- Shutdown notifications.

The most important one for our discussion is the second, Stingy, which handles deduplication of events. Workers pull items from the queue in a thread-safe way; items are keyed by resource and deduplicated, and once a reconcile finishes, the item is marked as done and removed from the queue. One article covers this topic in a bit more detail: Kubernetes Operators Concurrency.
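The Stingy behaviour can be illustrated with a small queue that tracks which keys are waiting and which are being processed. This is a simplified sketch inspired by the description above, not the client-go workqueue itself: stingyQueue and its methods are hypothetical, and Get returns immediately instead of blocking.

```go
package main

import (
	"fmt"
	"sync"
)

// stingyQueue is a hypothetical deduplicating queue keyed by resource name.
type stingyQueue struct {
	mu         sync.Mutex
	queue      []string        // keys waiting to be picked up, in arrival order
	dirty      map[string]bool // keys that need (re)processing
	processing map[string]bool // keys currently held by a worker
}

func newStingyQueue() *stingyQueue {
	return &stingyQueue{dirty: map[string]bool{}, processing: map[string]bool{}}
}

// Add enqueues a key unless it is already waiting or being processed.
func (q *stingyQueue) Add(key string) {
	q.mu.Lock()
	defer q.mu.Unlock()
	if q.dirty[key] {
		return // already waiting: duplicates collapse into one item
	}
	q.dirty[key] = true
	if q.processing[key] {
		return // in progress: Done will put it back on the queue
	}
	q.queue = append(q.queue, key)
}

// Get hands the oldest key to a worker; ok is false when the queue is empty.
func (q *stingyQueue) Get() (key string, ok bool) {
	q.mu.Lock()
	defer q.mu.Unlock()
	if len(q.queue) == 0 {
		return "", false
	}
	key, q.queue = q.queue[0], q.queue[1:]
	q.processing[key] = true
	delete(q.dirty, key)
	return key, true
}

// Done marks a key as finished and re-queues it if it changed meanwhile.
func (q *stingyQueue) Done(key string) {
	q.mu.Lock()
	defer q.mu.Unlock()
	delete(q.processing, key)
	if q.dirty[key] {
		q.queue = append(q.queue, key)
	}
}

// Len returns the number of keys waiting to be picked up.
func (q *stingyQueue) Len() int {
	q.mu.Lock()
	defer q.mu.Unlock()
	return len(q.queue)
}

func main() {
	q := newStingyQueue()
	q.Add("default/a")
	q.Add("default/a") // duplicate event for the same resource
	q.Add("default/b")
	fmt.Println("waiting:", q.Len())

	key, _ := q.Get()
	q.Add(key) // new event arrives while the resource is being reconciled
	fmt.Println("waiting while", key, "is processed:", q.Len())

	q.Done(key)
	fmt.Println("waiting after Done:", q.Len())
}
```

Because a key in progress is only re-queued by Done, two workers can never hold the same resource at the same time, regardless of how many workers are running.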

Keep in mind that reliability is higher priority than speed.

Finalizers

Finalizers are used when you have external or not owned resources that you want to delete (cleanup) when the parent resource is being deleted.

The flow goes as follows:

- User issues a delete request on a resource, for example kubectl delete mycustomresource my-instance

- Kubernetes marks the resource for deletion

  - The API server sets the deletionTimestamp but does not delete the resource immediately

  - The resource remains in the system until all Finalizers are removed

- Operator handles cleanup

  - The Operator detects that the deletionTimestamp is set

  - Runs the cleanup logic

  - Removes the finalizer from the resource

- Once the finalizer is removed, the API server deletes the resource from the system
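The flow above can be sketched as a reconcile function over a simplified resource. Everything here is a stand-in chosen for illustration: the resource type, the cleanupFinalizer name, and the helper functions are hypothetical, and a real controller would work with the object’s metadata and the controllerutil helpers from controller-runtime instead.

```go
package main

import (
	"fmt"
	"time"
)

// cleanupFinalizer is a hypothetical finalizer name for this example.
const cleanupFinalizer = "operator.example.com/cleanup"

// resource is a minimal stand-in for the metadata of a custom resource.
type resource struct {
	Name              string
	DeletionTimestamp *time.Time
	Finalizers        []string
}

func containsFinalizer(r *resource, name string) bool {
	for _, f := range r.Finalizers {
		if f == name {
			return true
		}
	}
	return false
}

func removeFinalizer(r *resource, name string) {
	kept := r.Finalizers[:0]
	for _, f := range r.Finalizers {
		if f != name {
			kept = append(kept, f)
		}
	}
	r.Finalizers = kept
}

// markDeleted simulates the API server reacting to a delete request.
func markDeleted(r *resource) {
	now := time.Now()
	r.DeletionTimestamp = &now
}

// reconcile returns true once no finalizers remain on a resource marked for
// deletion, which is the point where the API server may remove it.
func reconcile(r *resource, cleanup func() error) (bool, error) {
	if r.DeletionTimestamp == nil {
		// Not being deleted: make sure our finalizer is registered.
		if !containsFinalizer(r, cleanupFinalizer) {
			r.Finalizers = append(r.Finalizers, cleanupFinalizer)
		}
		return false, nil
	}
	if containsFinalizer(r, cleanupFinalizer) {
		if err := cleanup(); err != nil {
			return false, err // keep the finalizer so cleanup is retried
		}
		removeFinalizer(r, cleanupFinalizer)
	}
	return len(r.Finalizers) == 0, nil
}

func main() {
	res := &resource{Name: "my-instance"}
	gone, _ := reconcile(res, func() error { return nil })
	fmt.Println("deletable:", gone, "finalizers:", res.Finalizers)

	markDeleted(res)
	gone, _ = reconcile(res, func() error {
		fmt.Println("cleaning up external resources")
		return nil
	})
	fmt.Println("deletable:", gone, "finalizers:", res.Finalizers)
}
```

Returning the error without removing the finalizer is the important design choice: the resource stays around until the cleanup has actually succeeded.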

More about finalizers can be found here

Operator Hub 🛒

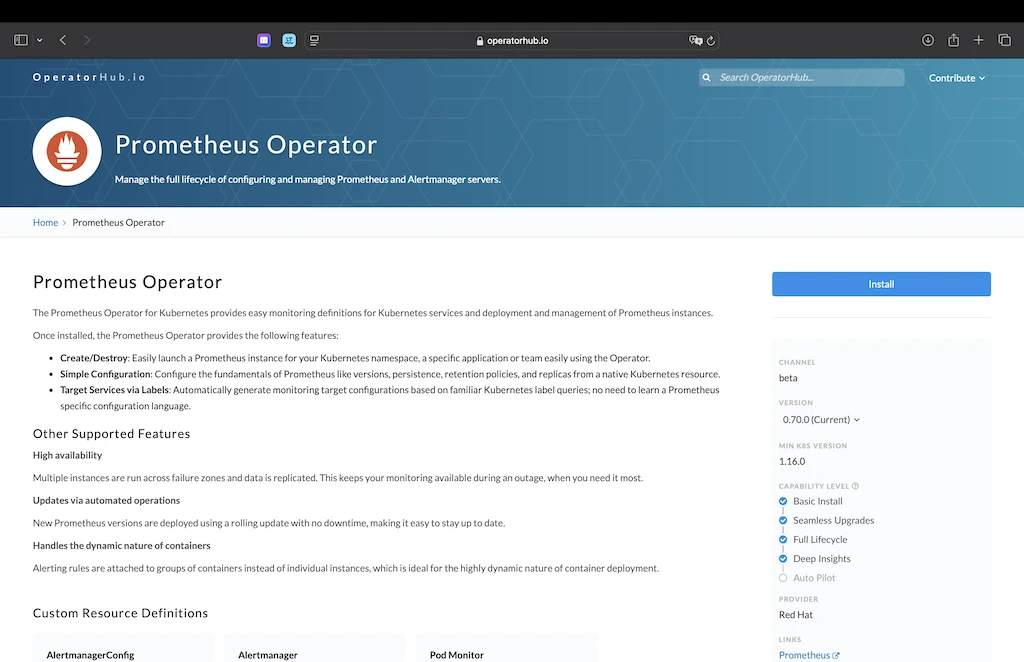

Every great open source project has a dedicated community, and so does the Operator Framework, in the form of an open catalog website of existing Operators: Operator Hub.

Feel free to check it out, as it might already have an Operator that you need in your Kubernetes cluster. It spans many categories such as Big data, Monitoring, Database, Networking, AI/ML and many more.

Each Operator has a proper documentation page, with its capability model on the right, the version of the Operator, and simple installation instructions.

Ending notes

We went from designing the functionality and API of our Operator to coding its logic and running it in our Kubernetes environment. This hands-on walkthrough should give you a good idea of how to build Operators and what they are used for, which is basically “automating Kubernetes resource management”, and it showcases how a standardized framework can make building an Operator that much easier and faster.

Though always, before considering building an Operator, check the Operator Hub as it might already have what you are looking for.

To finish, some good references for continuing to learn about Operators: